Go Beyond CSV: Data Ingestion with Pandas

16 Apr 2022 - Mala Deep Upadhaya

Mala Deep is a Business Intelligence Consultant, and community focused data citizen.

Go Beyond CSV: Data Ingestion with Pandas

Data will not always come to you in CSV

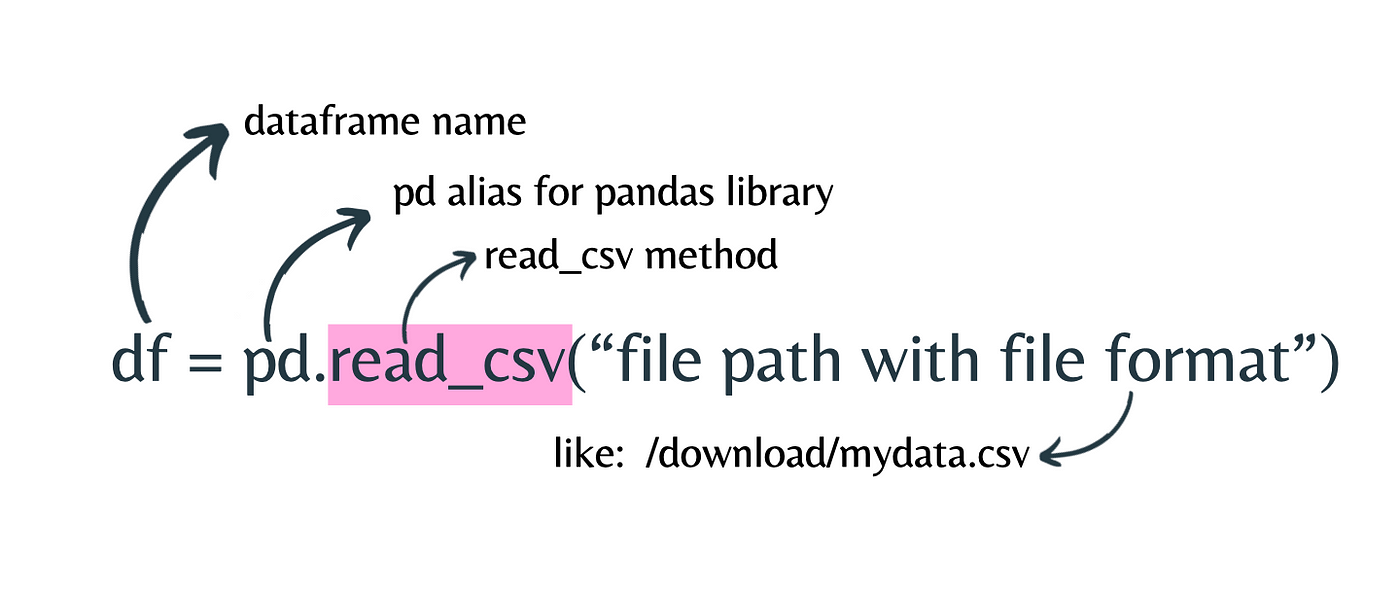

Pandas read_csv() method. Image by the author.

Ifyou are a complete beginner or think of yourself as a beginner to data engineering or data science, then you are totally familiar with the Jupyter notebook and the following command.

#importing dataset

df = pd.read_csv(“path/filename.extension”)

Then you know how to acquire data in CSV (Comma Separated Values). After all, before you can analyze data, you first have to acquire it.

From my experience and most of the tutorials/resources out there, they have always been written with working CSV files. So, when you go to work for a company, and someone gives you data in SQL and/or JSON, then you start to panic and create a self-image of “oh, this is going to be tough.”

But, if you follow this blog, you can easily work with any sort of data in a jupyter notebook.

Remember: Before you can analyze data, you first have to acquire it.

Let’s get started. Data ingestion is the process of pulling data from a variety of sources into your system with the purpose of easily exploring, maintaining, and using it for your own use. Today, you will learn how to transfer data from various formats such as Excel, HTML, SQL, and JSON into a Pandas dataframe object using various Pandas methods (highlighted below as read_csv()).

Pandas read_csv() method. Image by the author.

Step Involved

To transfer such data format, into a Python DataFrame object, the following steps are taken:

- Knowing from where and how you will pull data.

Unless at until you have an idea of where your data is and in what format you will never going to bring it as DataFrame object. After that, if your data is residing on the server, then you will need the path, and URL to the file.

- Use Pandas ‘read_x’ method

For loading and converting CSV data into a DataFrame object, use _read_csv()._You need to replace x with another.

- Validate the data using a DataFrame object.

Next, you need to verify whether the conversion was smooth.

For Excel

Excel sheets (spreadsheets) are still widely used in a variety of domains. Excel is widely used, from developing budgeting strategies to tracking daily student attendance.

- method: read_excel()

- extension/format: .xlsx or .xls

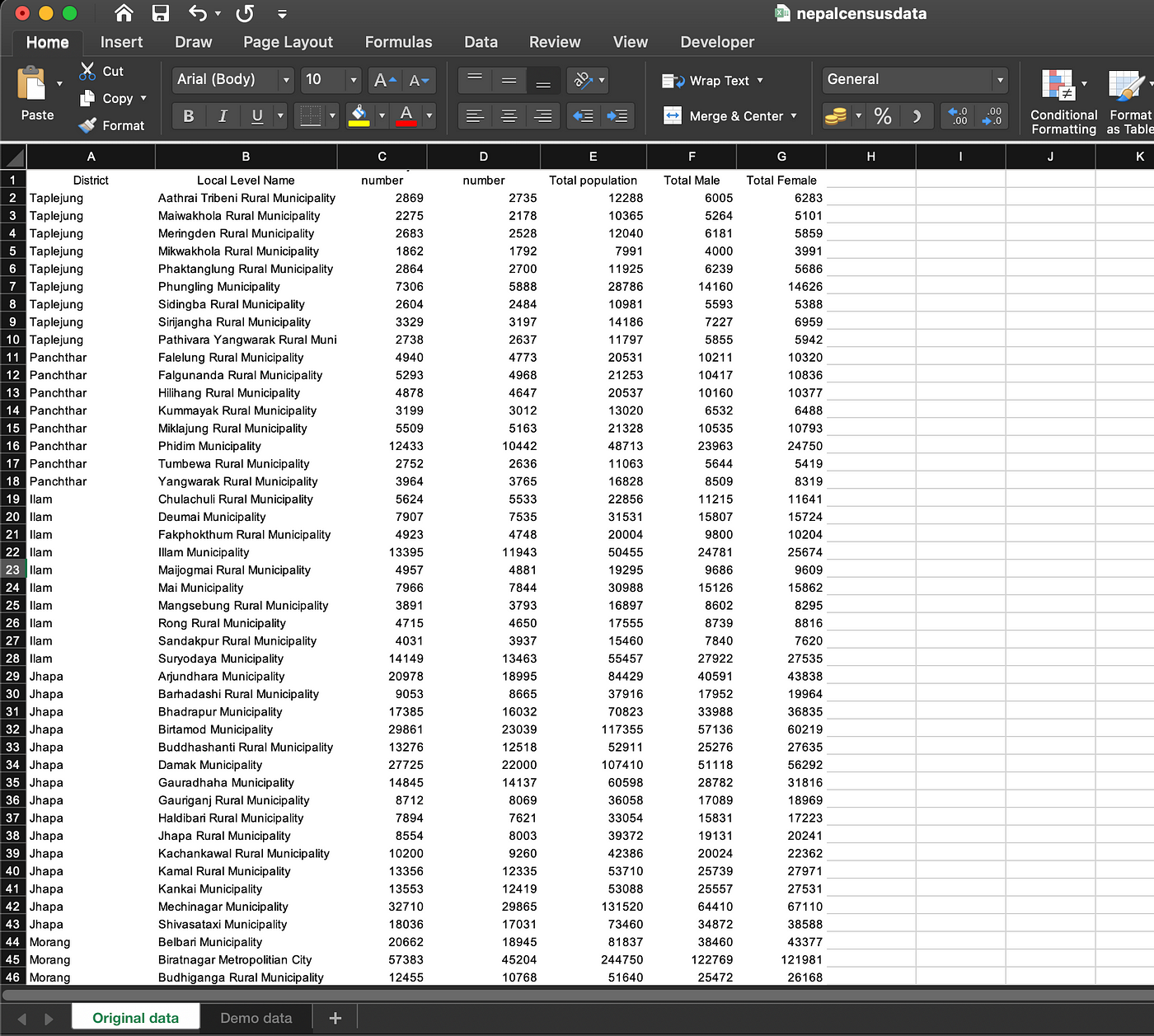

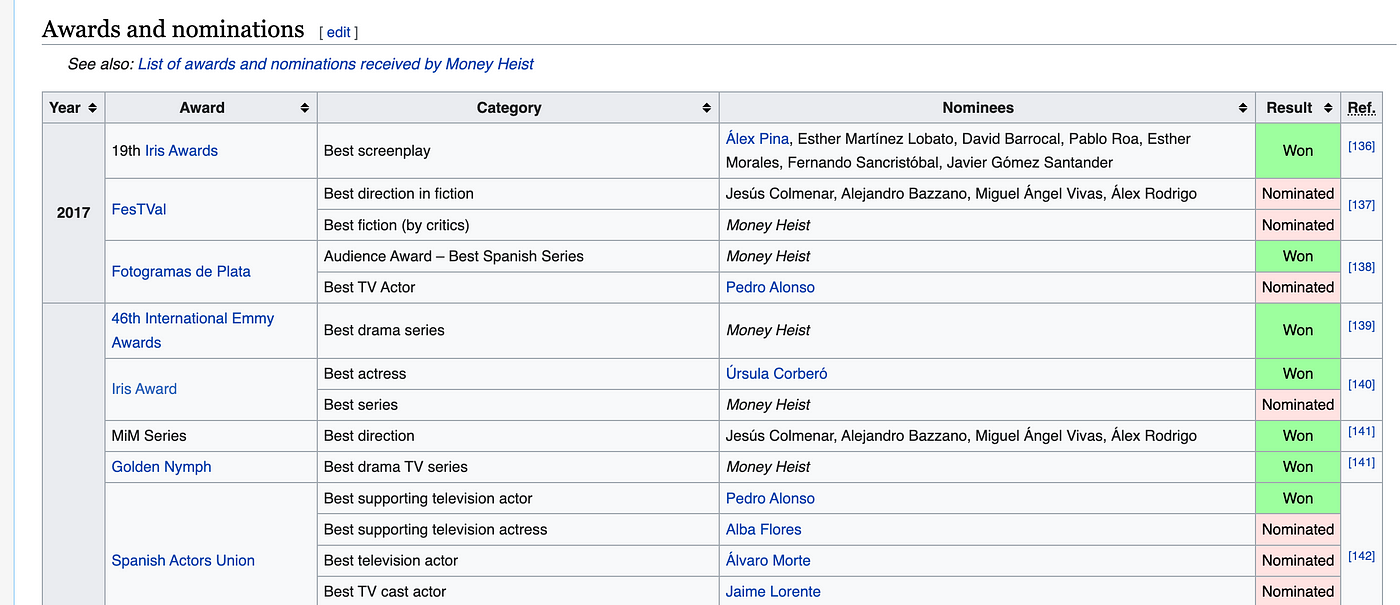

For now, you will be using Preliminary Data of National Population and Housing Census 2021, Nepal dataset from OpenDataNepal. I have saved this dataset as n_epalcensusdata.xlsx_

Snapshot of the Excel dataset. Image by the author. Preliminary Data of National Population and Housing Census 2021, Nepal dataset from OpenDataNepal.

#import the Pandas library

**import pandas#import excel data and store as data_excel dataframe (no need to do df always)

data_excel = pd.read_excel(“n_epalcensusdata.xlsx_”)#view the dataset as pandas dataframe object

**data_excel.head()

Output:

Output obtained from data_excel.head() as Pandas dataframe. Image by the author.

If you have more than one sheet in your workbook then an additional parameter called sheet_name is needed.

data_excel = pd.read_excel(“nepalcensusdata.xlsx”,sheet_name = 0)

data_excel.head()

Pandas read_excel() method. Image by the author.

In our case, we are importing the first sheet, thus it is given 0. The sheet numbers start at 0.

For HTML

If you are familiar with data scrapping (web scraping), then Beautiful Soup, Selenium, and Scrapy are familiar to you. You inspect the HTML structure of the website and pull down the data. If you feel it is sometimes time-consuming or hectic, you can easily do that using the read_html() method.

- method: read_html()

- extension: .html

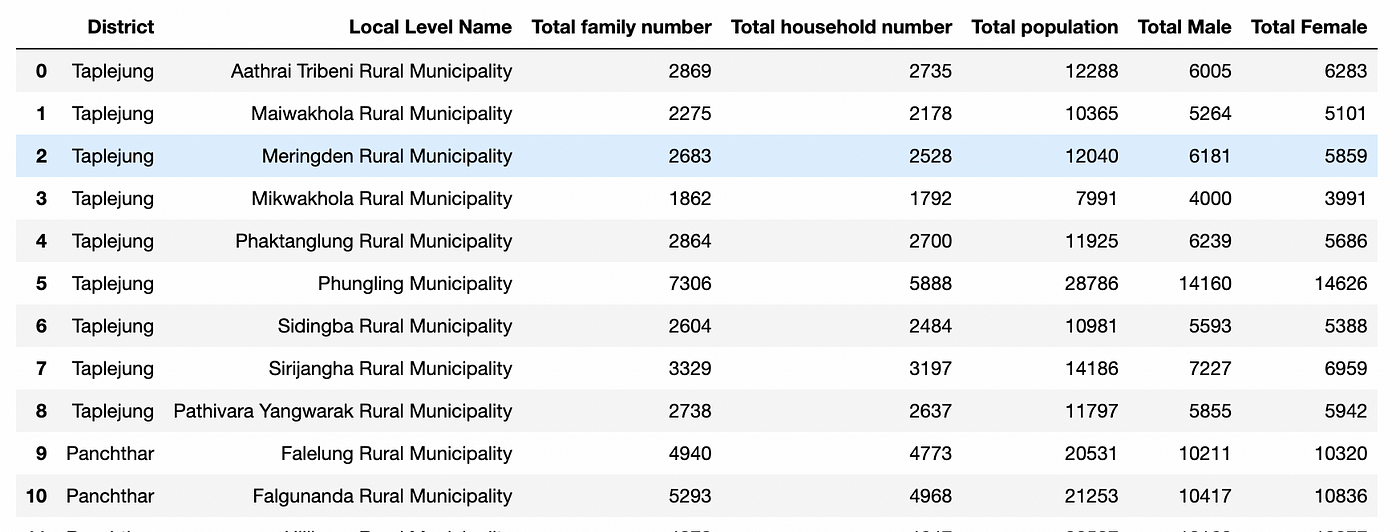

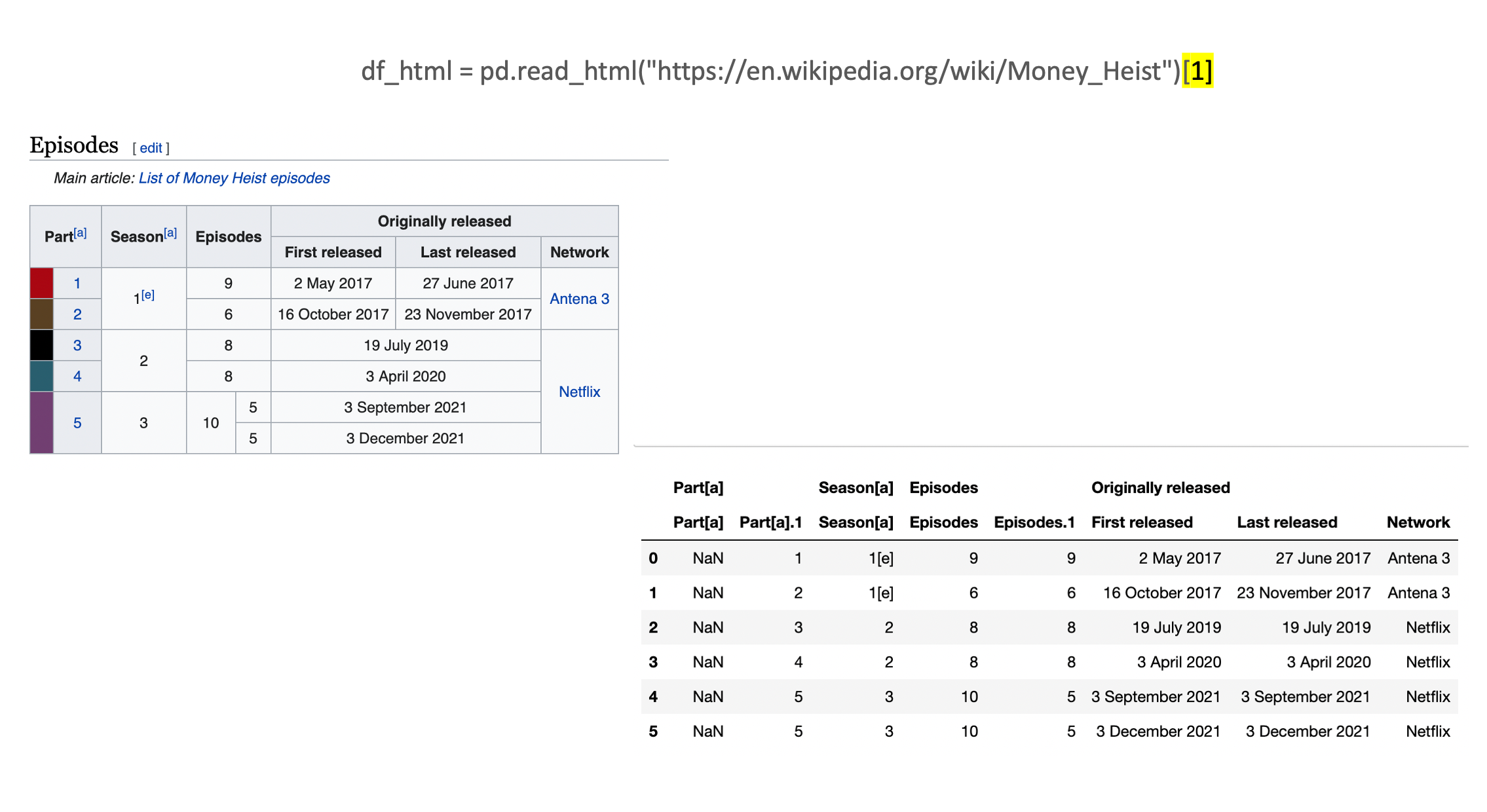

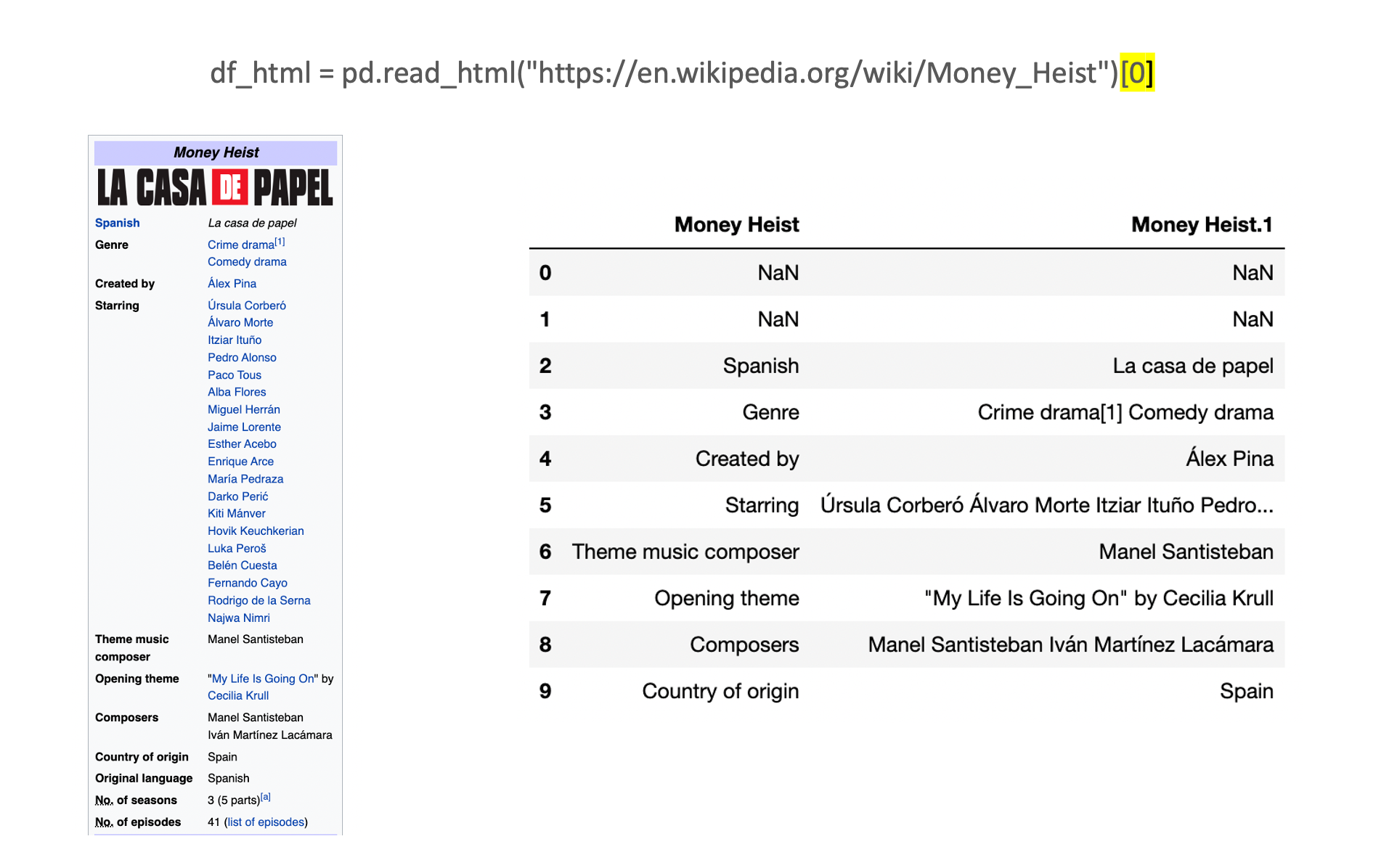

From Wikipedia, we will extract a table from the Money Heist (Spanish: Casa de Papel) series on Netflix from the following url: https://en.wikipedia.org/wiki/Money_Heist

We will be extracting the awards and nominations tables.

Snapshot of Money Heist awards and nominations table from Wikipedia. Image by the author.

#import the Pandas library

import pandas#import html data and store as data_html dataframe

**data_html = pd.read_html(“https://en.wikipedia.org/wiki/Money_Heist”)[1]#view the dataset as pandas dataframe object**

data_html.head()

Output:

Output obtained from data_html.head() about awards and nominations of Netflix series Money Heist as Pandas dataframe. Image by author.

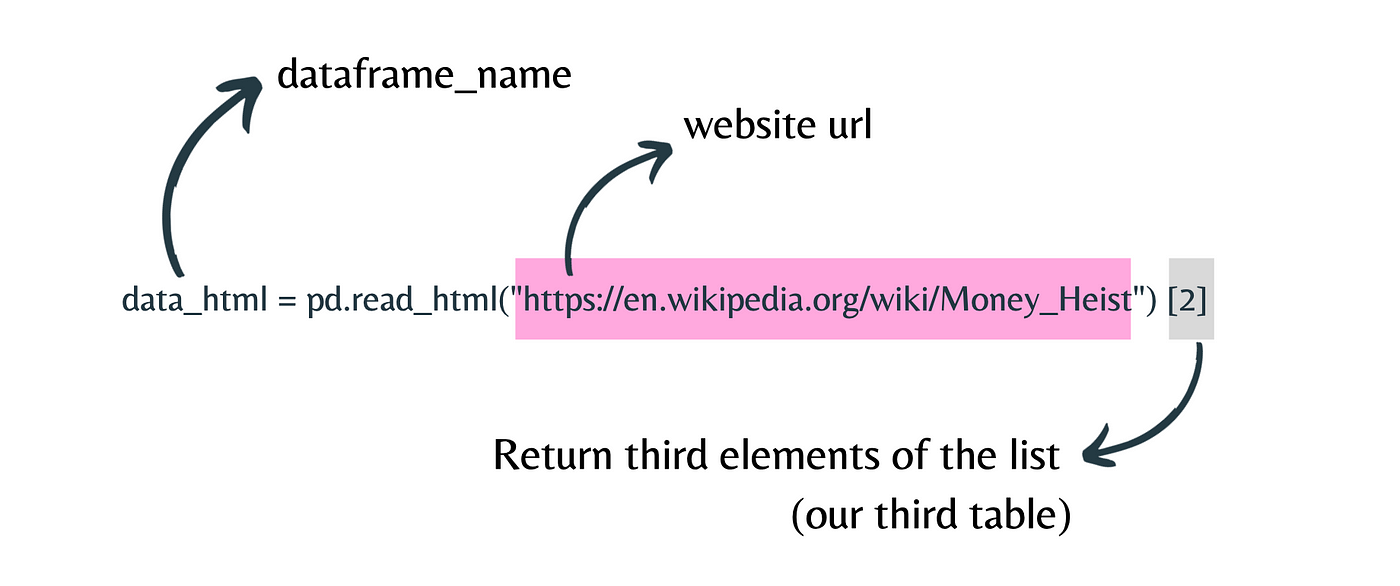

Pandas read_html() method. Image by the the author.

Here, the initial output from the read_html() method is a list, and to convert that list into a dataframe, you have to use [0] at the end. So, here you have used [2] because, on the wikipedia page, you wanted to extract the awards and nominations table. Before this table, there were two more tables. One is called “infobox,” and the next is “Episodes” and to extract the infobox, you need to use [0] and for the episode table [1].

Pandas read_html() method for ingesting different tables. Image by the the author.

Remember that using read_html() is not a simple task.To get the exact table you are looking at, you need to play with your initial result without any frustration.

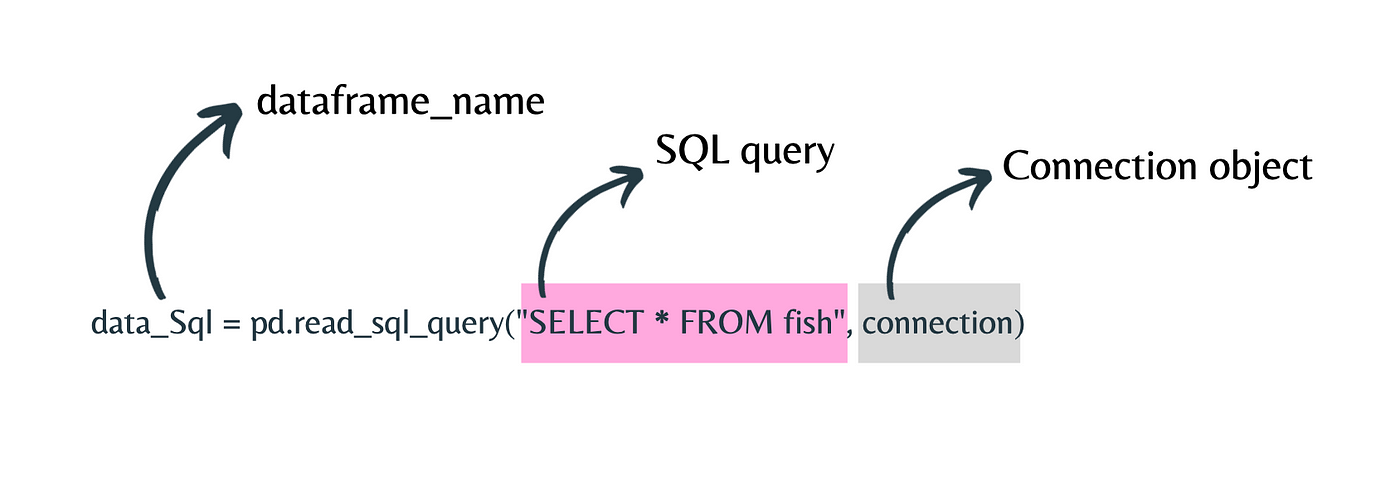

For SQL

Structured Query Language (SQL) is a standard language for storing, manipulating, and retrieving data in databases. Reading data from a database is a two-step process. You first make a way to connect to a database, then query it with SQL and Pandas. In Python, to connect to a SQL server, you need to have either SQLAlchemy connectable or sqlite3.

- method: read_sql_query()

- extension: .db

Unlike other formats, for SQL you will have to write a few more lines of code. You first make a connection with the database. Then you will use Pandas’s read_sql_query() function to convert the output to a dataframe and write a SQL query to import all columns from the database.

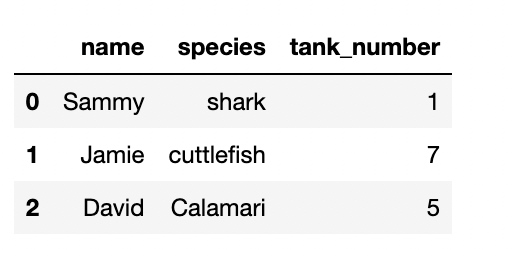

For now, the demo my_aquarium.db is created with a single table called “fish” with the following columns: name, species, tank_number.

#import the required libraries

**import sqlite3

import pandas#prepare a connection object and Pass the Database name as a parameter

connection = sqlite3.connect(“aquarium.db”)#use read_sql_query method and Pass SELECT query and connection object as parameter

data_Sql = pd.read_sql_query(“SELECT * FROM fish”, connection)#**view the dataset as pandas dataframe object

**print(data_Sql)#close the connection object

**connection.close()

Output:

Output obtained from read_sql_query() with fish table as Pandas dataframe. Image by the author.

Pandas read_sql_query() method. Image by the author.

Remember to close the database connection every time you open it.

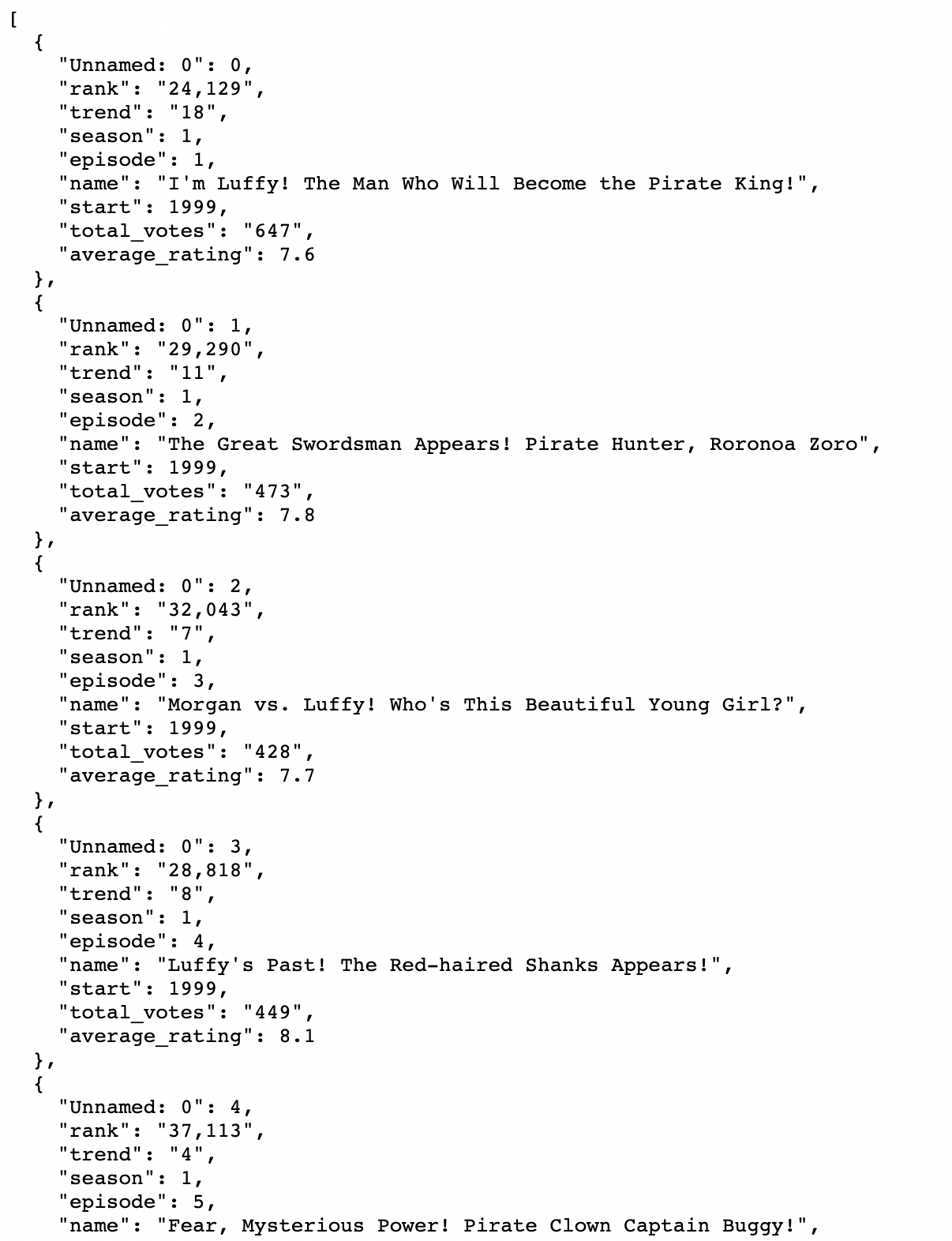

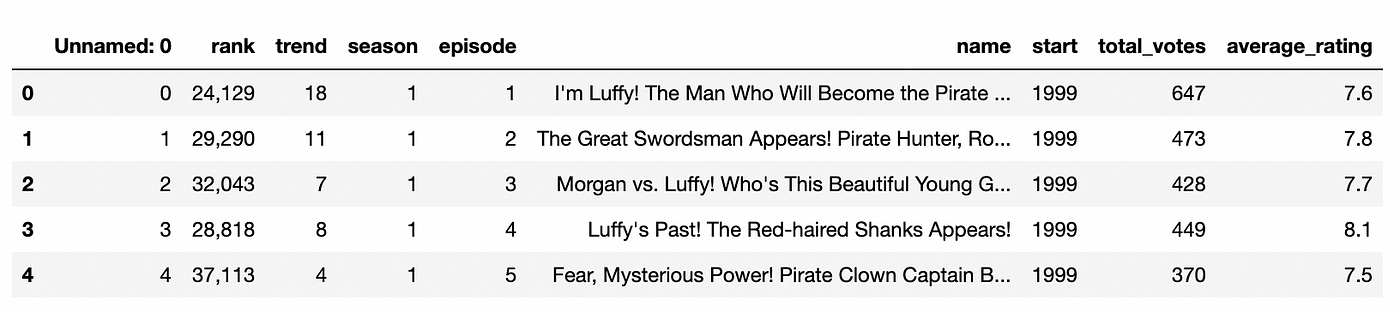

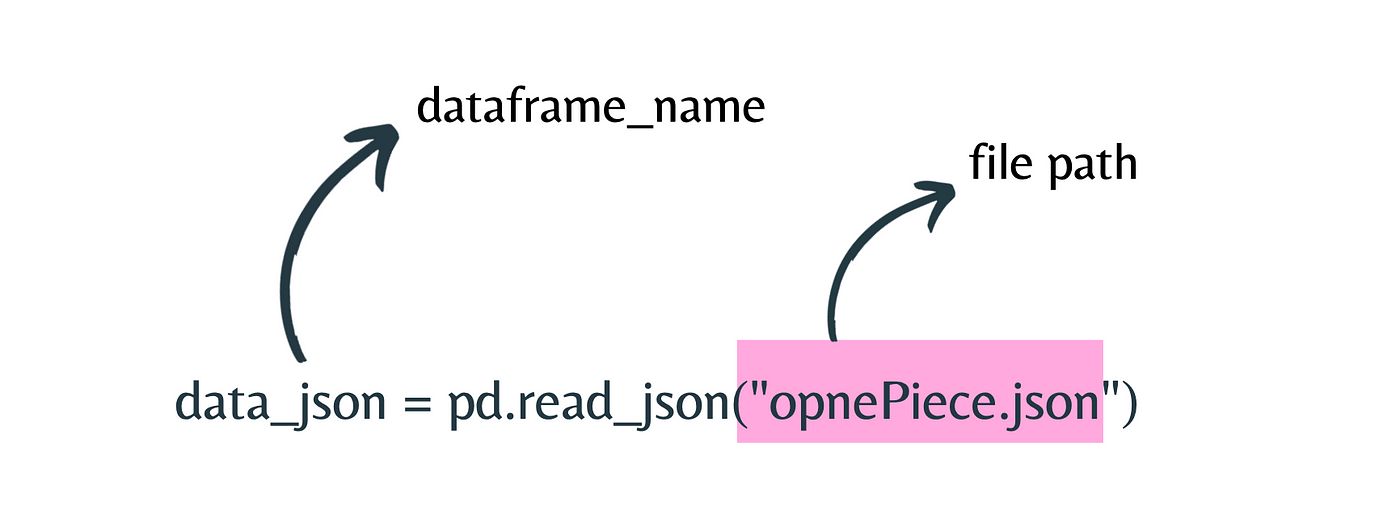

For JSON

JavaScript Object Notation (JSON) is the most common file format preferred by web developers and data engineers. It’s used widely across the internet for almost every single API that you will access.

- method: read_json()

- extension: .json

You will use data from One Piece Anime from Kaggle stored as openPiece.json

Snapshot of One piece anime from Kaggle. Image by author.

#import the Pandas library

import pandas#import html data and store as data_json dataframe

**data_json = pd.read_json(“opnePiece.json”)#view the dataset as pandas dataframe object**

data_json.head()

Output:

Output obtained from read_json() as Pandas DataFrame. Image by the author.

Pandas read_json() method. Image by the author.

In this manner, you can obtain data in a variety of formats.

Remember: Acquiring data is just the beginning.

Of course, acquiring data is just the beginning. After this, you need to explore data and play with it before you get deep into a data science project. But if you need ideas on where to go next, go with exploratory data analysis for begineers.

PS: I was able to delve deeper into this because of the Data Fellowship opportunity provided by Code For Nepal.I’d like to express my gratitude to Code for Nepal for providing a fantastic opportunity to the Nepali community, as well as the DataCampfor believing in Code for Nepal.

If you have any queries regarding the article or want to work together on your next data engineering or data science project, ping me on LinkedIn.

Originally published at Mala’s Medium blog